Nov 30, 2025

AI agents now sit between users and websites.

They research products, summarize options, answer questions, and increasingly make purchasing decisions on behalf of users. This is no longer experimental behavior. It is already visible in search, commerce, and support flows.

Yet most teams are still relying on traditional analytics stacks that were designed to track human clicks, sessions, and pageviews.

That mismatch creates a serious problem.

If AI agents cannot be seen, measured, or understood in your analytics, you are making growth, product, and content decisions with incomplete data. In some cases, you are optimizing for a channel that no longer drives the decision.

This article explains why traditional analytics break down in an agent-driven environment, what teams are missing today, and how to adapt measurement for AI-mediated discovery and commerce.

The Shift Analytics Teams Are Missing

AI agents do not behave like users

Traditional analytics assumes:

A human loads a page

A browser executes JavaScript

Events fire inside a session

Attribution flows through referrers and UTM parameters

AI agents do none of this reliably.

They retrieve content server-side.

They summarize rather than browse.

They rarely execute client-side scripts.

They often mask or collapse referrer data.

From the perspective of tools like Google Analytics, much of this activity either appears as low-quality bot traffic or does not appear at all.

That does not mean it is irrelevant traffic. It means your measurement layer is outdated.

Why Google Analytics Cannot See AI Agents Clearly

Client-side instrumentation fails first

Google Analytics relies heavily on browser-based execution. AI agents increasingly fetch content directly from servers or caches.

No browser means:

No JavaScript execution

No session construction

No meaningful event tracking

From an analytics standpoint, this traffic effectively disappears.

Attribution collapses under agent mediation

When a user asks an AI system a question and receives an answer, the decision is often made before any click happens.

If a click does occur, it may come from:

An anonymized or generic referrer

A shared cache

A proxy with stripped headers

Your analytics may record a visit, but it will not reflect the influence the agent had on the decision.
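One partial mitigation is to label the visits that do arrive by the kind of referrer they carry, so that missing and assistant-originated referrers are at least counted separately. The sketch below is illustrative only: the hostnames in `AI_REFERRER_HOSTS` are assumptions rather than an authoritative list, and `classify_referrer` is a hypothetical helper, not part of any analytics product.

```python
from urllib.parse import urlparse

# Assumed, non-exhaustive hostnames of AI assistants; verify against your own traffic.
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "perplexity.ai",
    "gemini.google.com",
    "copilot.microsoft.com",
}


def classify_referrer(referrer):
    """Return 'missing', 'ai_assistant', or 'other' for a raw Referer value."""
    # Access logs commonly write "-" when no Referer header was sent.
    if referrer is None or referrer.strip() in ("", "-"):
        return "missing"
    host = (urlparse(referrer.strip()).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    # Match a listed host or any of its subdomains.
    if any(host == h or host.endswith("." + h) for h in AI_REFERRER_HOSTS):
        return "ai_assistant"
    return "other"
```

A high share of `missing` referrers on deep product or pricing pages does not prove agent mediation, but it is a signal worth tracking over time.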

This breaks traditional attribution models at a structural level.

AI agents compress intent, not journeys

Humans explore.

AI agents compress.

They evaluate multiple sources, extract the most relevant information, and output a synthesized answer. The journey happens off-site.

Analytics tools built around funnel steps and behavioral paths cannot observe this process. They only see the residue, if anything at all.

The Strategic Cost of Analytics Blindness

Teams optimize for what they can see

If your dashboards only reflect human traffic, your roadmap will bias toward:

Click optimization

UI-level conversion tweaks

Content that performs well for human skimmers, not machine extractors

Meanwhile, AI agents may be selecting competitors because their content is easier to parse, summarize, and trust.

You lose influence without seeing the loss.

AI agents become silent gatekeepers

In agent-mediated discovery, visibility is binary.

Either the agent can confidently extract and reason about your offering, or it cannot.

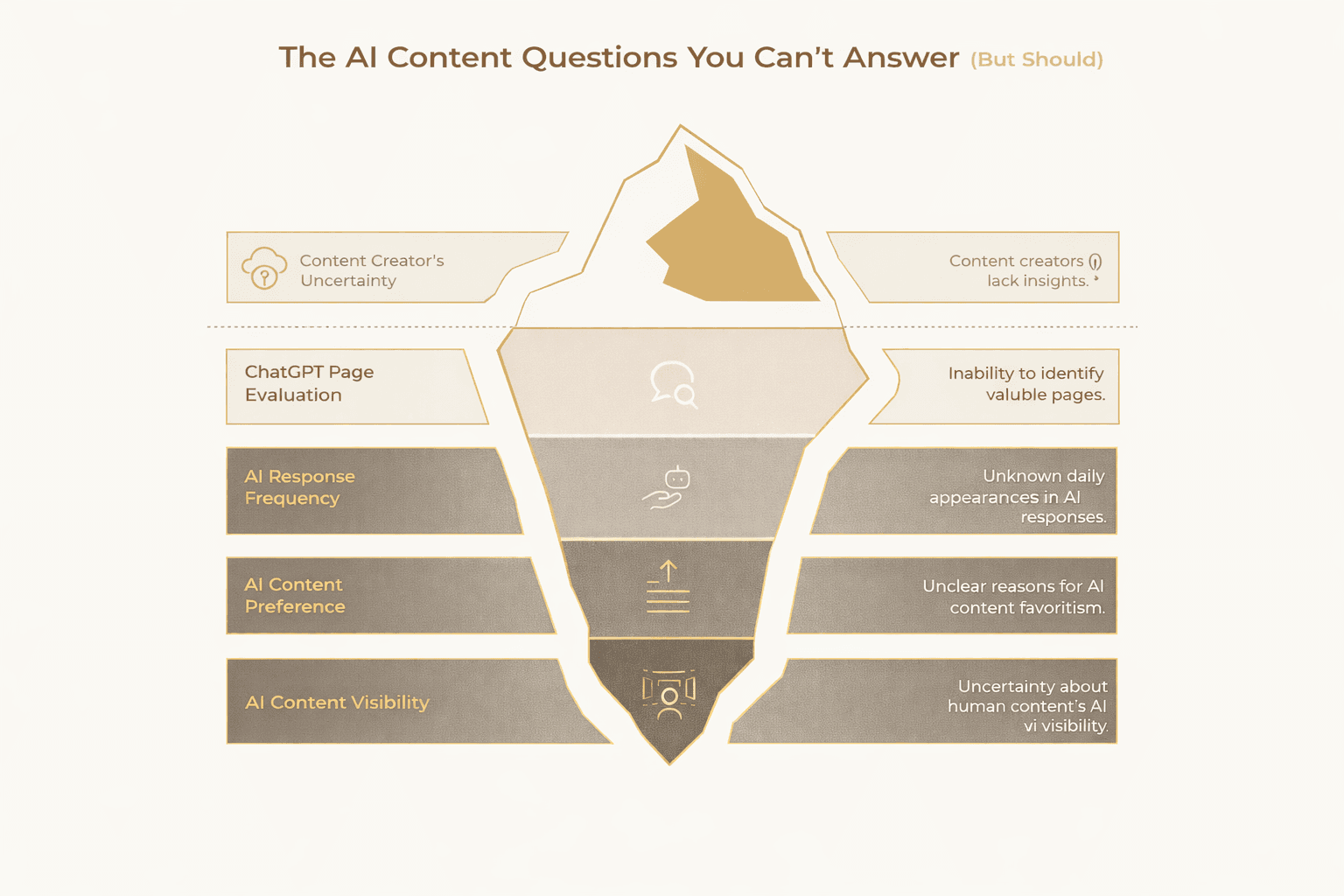

Analytics blindness means you do not know:

Which pages agents access

What content they extract

Where they fail to understand your value

That is not a marketing problem. It is an infrastructure problem.

What Analytics Must Do in an Agentic World

Measurement shifts from sessions to signals

The goal is no longer tracking behavior flows.

The goal is understanding:

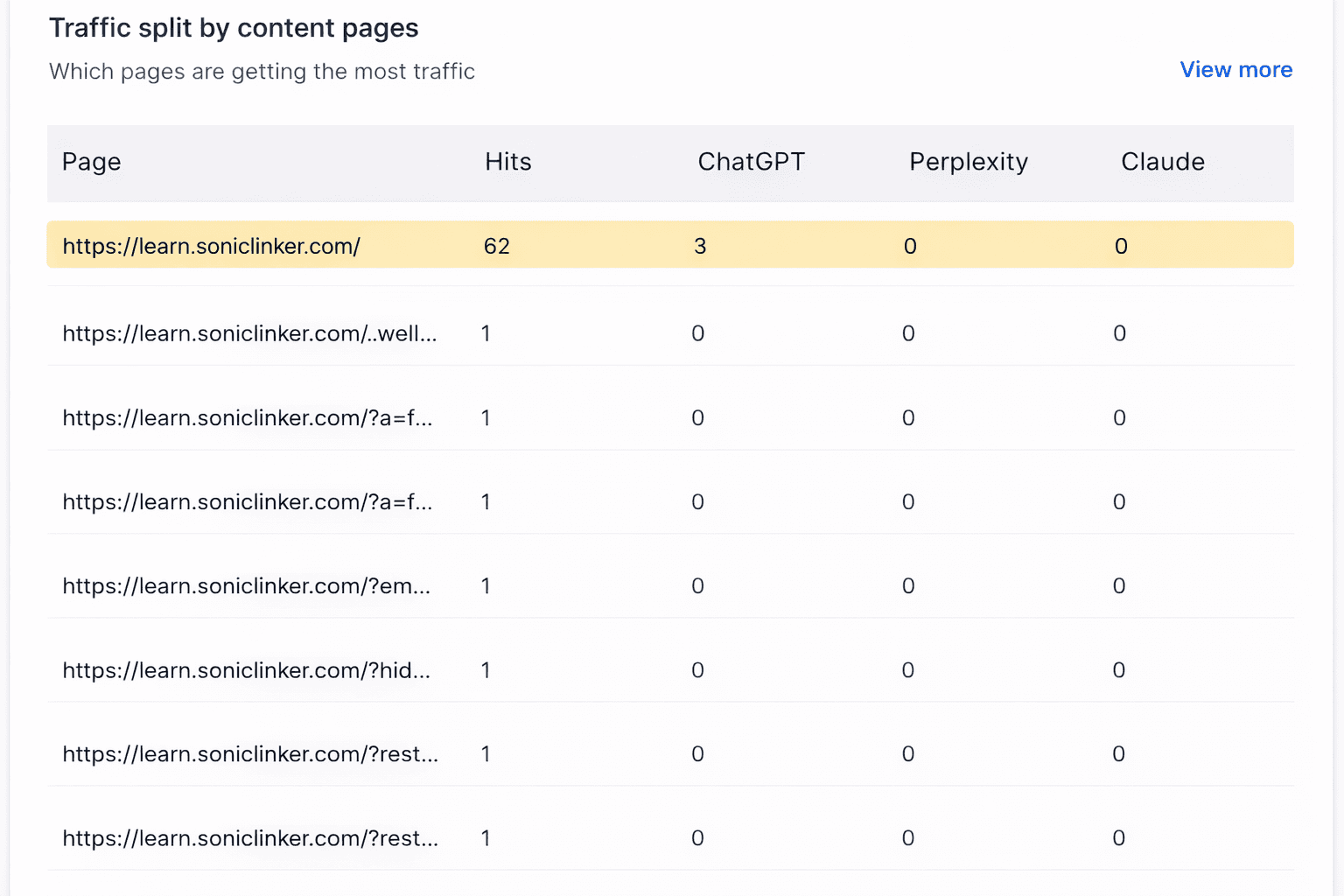

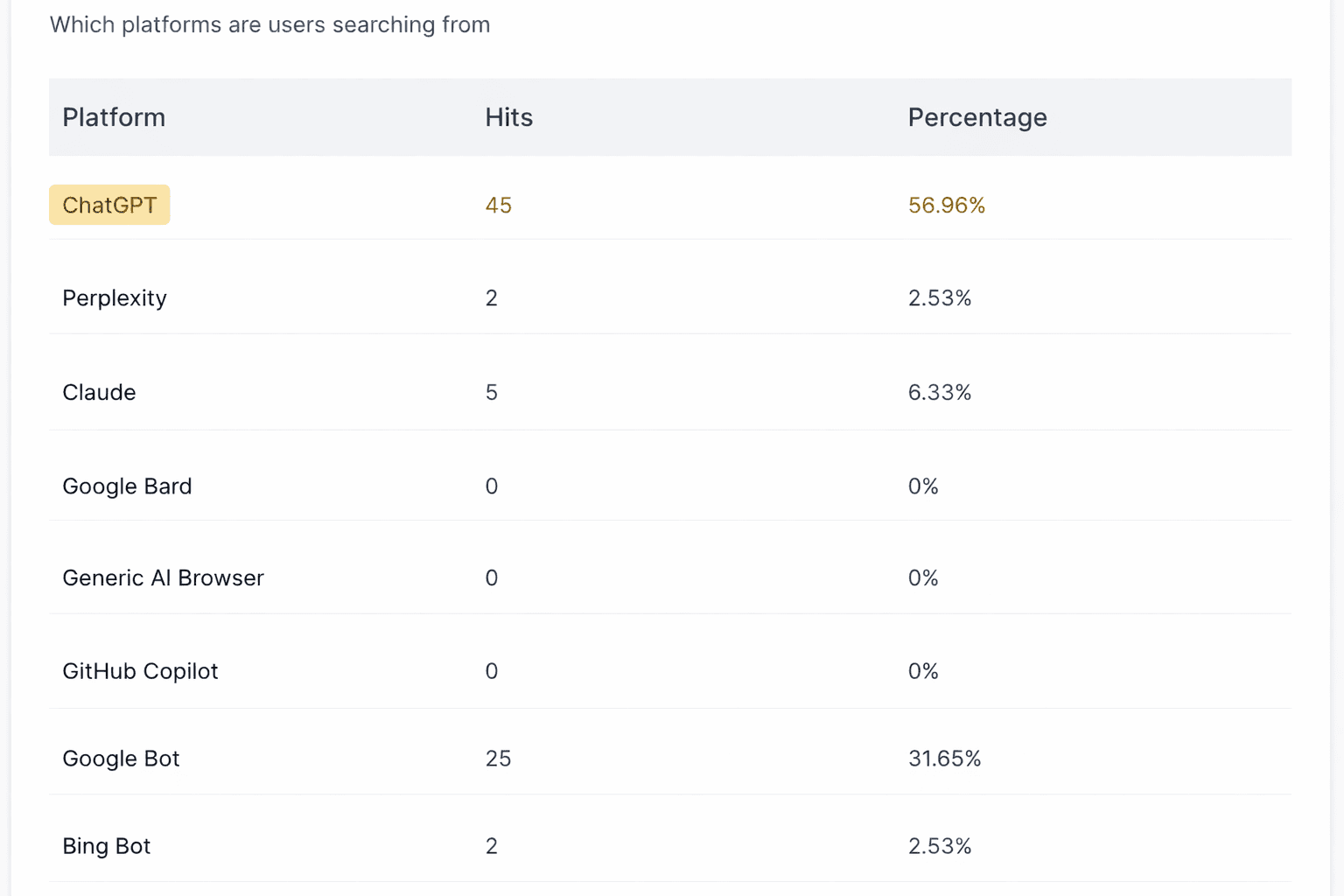

Which AI systems access your content

What content is retrieved most often

Whether critical product, pricing, and policy information is machine-readable

This requires server-level visibility and log-based analytics, not just client-side scripts.
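As a starting point, log-based analytics can be as simple as counting which paths known AI user agents request. The sketch below assumes the "combined" access log format and an illustrative, non-exhaustive set of user-agent tokens. The names `AI_AGENT_TOKENS`, `detect_agent`, and `summarize_agent_hits` are hypothetical. User-agent strings can be spoofed, so treat the counts as indicative rather than verified.

```python
import re
from collections import Counter

# Illustrative, non-exhaustive user-agent tokens; keep this list under review.
AI_AGENT_TOKENS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot", "ClaudeBot", "PerplexityBot")

# Assumes the "combined" access log format (request, status, bytes, referrer, user agent).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def detect_agent(user_agent):
    """Return the first matching AI agent token, or None for other traffic."""
    ua = user_agent.lower()
    for token in AI_AGENT_TOKENS:
        if token.lower() in ua:
            return token
    return None


def summarize_agent_hits(lines):
    """Count requests per (agent token, path) across an iterable of log lines."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that are not in the assumed format
        agent = detect_agent(match.group("user_agent"))
        if agent is not None:
            hits[(agent, match.group("path"))] += 1
    return hits
```

Calling `summarize_agent_hits(open("access.log"))` on a real log (the filename is a placeholder) gives a first view of which pages agents actually retrieve.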

Content must be evaluated for extractability

Analytics needs to answer new questions:

Can an AI agent summarize this page correctly?

Are key facts explicit or implied?

Does structured data reinforce claims?

These are not UX questions. They are AI comprehension questions.
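One of these questions, whether structured data reinforces a claim, can be checked mechanically. The sketch below uses only the Python standard library to collect JSON-LD blocks from a page and test whether a schema.org `Product` offer states the same price a human sees. `JsonLdCollector` and `price_is_machine_readable` are hypothetical names. The check assumes a single `offers` object and ignores `@graph` wrappers, so it is a narrow illustration rather than a full validator.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect parsed JSON-LD blocks from <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is itself a finding worth logging


def price_is_machine_readable(html, visible_price):
    """True if a schema.org Product block states a price equal to visible_price."""
    collector = JsonLdCollector()
    collector.feed(html)
    for block in collector.blocks:
        items = block if isinstance(block, list) else [block]
        for item in items:
            if not isinstance(item, dict) or item.get("@type") != "Product":
                continue
            offers = item.get("offers") or {}
            if isinstance(offers, dict) and str(offers.get("price")) == str(visible_price):
                return True
    return False
```

A page that fails this check may still be summarized correctly, but the agent then has to infer the price from prose instead of reading it as an explicit fact.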

Visibility replaces traffic as the leading indicator

In many cases, the first sign of success will not be traffic growth.

It will be:

Inclusion in AI answers

Accurate brand representation

Correct product and policy descriptions

Analytics systems must be able to detect and validate these signals.
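A minimal way to start is to record AI answers to a fixed set of prompts and score them. The sketch below assumes you already collect those answers somehow, which is out of scope here. `AnswerSample` and `visibility_metrics` are hypothetical names, and the substring matching is deliberately crude: it will miss paraphrases and should be read as a rough baseline.

```python
from dataclasses import dataclass


@dataclass
class AnswerSample:
    prompt: str  # the question posed to the AI system
    answer: str  # the text it returned


def visibility_metrics(samples, brand, expected_facts):
    """Compute inclusion and accuracy rates over recorded AI answers.

    inclusion_rate: share of answers that mention the brand.
    accuracy_rate: share of brand-mentioning answers containing every expected fact.
    """
    total = len(samples)
    included = [s for s in samples if brand.lower() in s.answer.lower()]
    accurate = [
        s for s in included
        if all(fact.lower() in s.answer.lower() for fact in expected_facts)
    ]
    return {
        "inclusion_rate": len(included) / total if total else 0.0,
        "accuracy_rate": len(accurate) / len(included) if included else 0.0,
    }
```

Running the same prompts on a schedule and comparing the two rates over time gives a simple consistency signal alongside inclusion and accuracy.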

Strategic Implications for Product and Growth Teams

If you ignore this shift:

Your analytics will increasingly misrepresent reality

Your optimization efforts will drift away from actual decision-making layers

Competitors who adapt earlier will shape AI-generated narratives by default

Teams that adapt will gain leverage not by gaming algorithms, but by making their offerings legible to machines that now act as intermediaries.

This is a structural advantage, not a tactic.

Practical Execution: What Teams Should Do Next

Audit server logs for AI agent activity

Identify known agent user agents and request patterns. Treat them as a first-class audience.

Map critical pages for machine readability

Product pages, pricing, policies, and FAQs must be explicit, structured, and self-contained.

Separate human UX optimization from AI extractability

These are related but not identical goals. Design for both intentionally.

Introduce AI visibility metrics

Track inclusion, accuracy, and consistency in AI-generated answers where possible.

Rethink analytics ownership

This is not just marketing analytics. It touches product, infra, and data teams.

AI agents are already shaping how decisions are made.

Analytics systems that cannot see or interpret agent behavior create false confidence and misaligned priorities. Google Analytics was built for a different era of the web.

The teams that win next will not be those with better dashboards, but those who redesign measurement around how decisions now happen.

Visibility, extractability, and machine comprehension are becoming growth primitives.